Engineering Intelligence

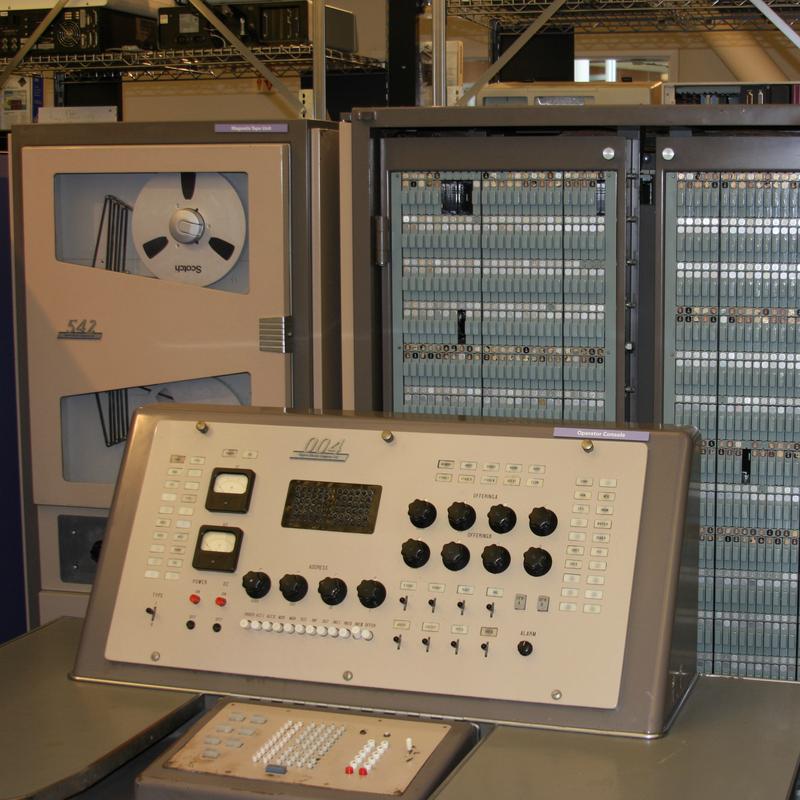

(Pargon/flickr)

Transcript

BROOKE GLADSTONE: Artificial intelligence is a contentious field. To the people who own it, the engineers, it’s about creating systems that can adjust their behavior based on data gleaned from past experience or new information. Systems that can establish sub-goals for themselves to meet the broader goals of their designers, machines that, in their word, “think.”

But philosophers and others object, saying that to call that thinking is to imply that the complex web of sensations and culture that guide our thoughts can be reduced to code and that our minds are merely computers made of meat. Engineers respond with a shrug, a yawn and the observation that the proof is in the processing. Jerry Kaplan is an engineer and entrepreneur and a fellow at the Center for Legal Informatics at Stanford University. He says that the attitude of engineers hasn't changed since the day the term “artificial intelligence” was coined.

JERRY KAPLAN: It was held in the summer of 1956. It was a beautiful lazy summer, and it was a conference that was organized by John McCarthy who named the field “artificial intelligence” at that conference. There were three other organizers, so one was Marvin Minsky who at the time was at Harvard and is still kicking around at MIT, as you might know.

BROOKE GLADSTONE: Mm-hmm. [AFFIRMATIVE]

JERRY KAPLAN: Claude Shannon, famous as the father of information theory, and the fourth guy was Nathaniel Rochester, who was the manager of information research at IBM at the time.

BROOKE GLADSTONE: What were they there to talk about?

JERRY KAPLAN: I can actually read a little bit of it. The proposal said, “The study is to proceed on the basis of the conjecture that every aspect of learning or any other feature of intelligence can in principle be so precisely described that a machine can be made to simulate it.” And they went on to say, “We think that a significant advance can be made in one or more of these problems if a carefully selected group of scientists work on it together for a summer.”

BROOKE GLADSTONE: [LAUGHS] These men were not lacking in self-confidence, were they?

JERRY KAPLAN: They were not, and after the conference they made a number of wild predictions and got a great deal of publicity, which ruffled feathers at IBM Research.

The sales force was visiting customers, they were reading about this, and they were getting worried.

BROOKE GLADSTONE: What was IBM selling in 1956?

JERRY KAPLAN: Data processing equipment and really the only company or the primary company that was selling equipment of that kind. But the problem was that the customers were getting nervous about this stuff, because they were concerned that these machines were somehow going to take over their own jobs. IBM decided, in order to avoid this problem, they would close down their AI Group and then they armed their sales force with a response to this concern. And they came up with this little cliché that was, “Computers can only do what they are programmed to do.”

[BROOKE LAUGHS]

And that concept has set the tone, in my opinion, for the last 50 years of the way in which people tend to think of computers. And, unfortunately, it’s no longer true.

BROOKE GLADSTONE: You also sort of hinted that it might be a threat, but I guess you’re talking about to people's jobs, potentially, not to their actual lives or autonomy.

JERRY KAPLAN: Well, the impacts on the job market are going to be extreme. There’s a study that estimated that 47% of the US working population, that their jobs will, in the next five to ten years, come under potential threat of being completely automated. The people who are building these systems are going to have a unique advantage, in terms of skimming off the increased economic value that they’ll be providing to society. So it's going to have a significant impact, and already is having a significant impact, on income inequality.

BROOKE GLADSTONE: So the alphas will get richer and richer and, and the lower castes will just shuffle along with no purpose and not much money.

JERRY KAPLAN: Both the upper and the lower castes. This is the peculiar thing about it. We’re talking about lawyers and doctors, as well as the people to dig ditches and lay pipe. Really, we’re gonna find out that Karl Marx was right. The struggle between capital and labor is very real, but capital is going to win hands down.

BROOKE GLADSTONE: So what’s the distinction between strong AI and weak AI?

JERRY KAPLAN: Strong AI is basically a concept that intelligence is some kind of magic pixie dust that you sprinkle on a machine and it suddenly becomes conscious and aware, and that what we’re trying to do in AI is to duplicate this magical quality.

Now, weak AI is just a disparaging name for what we really see today, which is an engineering approach to artificial intelligence. It's solving specific problems. That might be driving or playing chess. So strong AI is really for the sci-fi freaks, and weak AI is really for the engineers.

BROOKE GLADSTONE: Let’s talk about progress in weak AI. There’s been a lot of it lately, right?

JERRY KAPLAN: Absolutely. What’s driving a revolution in artificial intelligence right now are really three fundamental things, dramatic improvements in computing power, in memory and networking. Just by way of example, the Watson program that won Jeopardy –

BROOKE GLADSTONE: Mm-hmm.

JERRY KAPLAN: - that had four terabytes of memory available to it to store all of its knowledge. If you look on Amazon today, you can buy 4 terabytes for $150. So we’re talking about a speed of improvement that is utterly astonishing.

The second thing really is breakthroughs in the research in what is commonly called machine learning - these are systems that learn by experience and learn by example – which leads me to the third point. What the internet provides is easy access to enormous mountains of data that can be used to train these systems. So the availability of that data has transformed artificial intelligence.

BROOKE GLADSTONE: When we talk about training, we aren’t really talking about just collecting more and more facts and information, right?

JERRY KAPLAN: It, it really means exactly the same thing. It means when you talk about like training your children, there’s kind of objective written facts that one can study, and that’s why they go to school. There’s things that you tell them, informal knowledge about the way the world really works that’s based on your own experience. That’s commonly called culture, things that you pass on to your children. But the third is simply interacting with the world. So the way your autonomous car is going to learn about the way your driveway’s shaped or whatever is by actually going out there and using sensory input in real time to incorporate that information into its model of the world.

So all of those – the sensors, the internet and access to accumulated knowledge of mankind is going to make them incredibly smart.

BROOKE GLADSTONE: I want to go back to your distinction between the engineers, who you approve of, and the science fiction freaks, like myself –

[KAPLAN LAUGHS]

- of whom you don’t. Six years before the Dartmouth Conference, Alan Turing, the famous British mathematician, designed his Turing test, which went like this - A human, who was designated as a judge, has a written conversation with the machine and another human for five minutes. Afterwards, if the human judge can’t distinguish between his human correspondent and the machine one, the machine passes the test.

JERRY KAPLAN: I think that the Turing test is really misunderstood. It wasn't really about some kind of bar exam for computers to be intelligent. If you actually go back and read what Turing had to say, he said, “The original question, ‘Can machines think?’ I believe to be too meaningless to deserve discussion. Nevertheless, I believe that at the end of the century the use of words and generally educated opinion will have altered so much that one will be able to speak of machines ‘thinking’ without expecting to be contradicted.”

And he was right. I can say, oh, Siri is thinking about my question, and you’re not confused about whether Siri is some kind of humanlike intelligence. It’s simply the use of the word.

BROOKE GLADSTONE: But he believed the mind was essentially a meat computer, and so have many other people since then. Ray Kurzweil, the visionary that actually foresaw much of the internet, believes that he can reconstruct his father with enough data and that if he can't tell the difference, then there is no difference.

JERRY KAPLAN: Ray is a remarkable scientist, but my point of view – and I’m representing, I think, a certain class of people who believe this – I see very little evidence – none - that machines are going to become conscious.

Let me quote a Dutch computer scientist that is quite famous but most people have never heard of, Edsger Dijkstra. He put this really well, in one sentence. “The question of whether machines can think is about as relevant as the question of whether submarines can swim.” The point is they’re going to do the same thing that you do, that you think of as thinking today. But they’re not going to do it the same way, and it doesn’t matter.

BROOKE GLADSTONE: Perhaps it's doing something that looks a little like what we do but isn't entirely like what we do. I mean, isn't thought about independent intention, not intention that is programmed in by somebody else? Or do you also think that that's just irrelevant, that individuality is one of those magical notions?

JERRY KAPLAN: The notion of you as a unique individual will also apply to these machines because they are going to have their own experiences and they’re going to draw their own conclusions and their own lessons from it.

The real problem comes in if people are going to be attributing to these machines feelings and characteristics which, in my view, legitimately they do not have. And those machines will be in a position to effectively manipulate us, acting in a way that, that evokes emotional reactions in us to providing sympathy for them.

BROOKE GLADSTONE: What danger does it pose?

JERRY KAPLAN: Well, it’s that we include them in the circle of humanity and of mankind. In a way where we sublimate our needs to the needs of, of these machines. And they will want to accomplish certain things. And if the best way to do that is to nag you or to cry at you or to put on a sad face, they might very well do that. They’re going to be able to communicate with other machines, negotiate deals, offer arrangements, do things on your behalf. And that’s going to create a variety of problems for society and, in particular, if we are not careful about the goals that we set and the capabilities that we provide these machines and these AI systems with. They have the potential to wreak havoc on our society.

BROOKE GLADSTONE: I love talking to you, Jerry.

JERRY KAPLAN: Oh, thank you so much, Brooke. It’s a pleasure to talk to you too.

BROOKE GLADSTONE: I only have one final question.

JERRY KAPLAN: Certainly.

BROOKE GLADSTONE: Do you think if you scratch an engineer, underneath you find a science fiction freak? [LAUGHING]

JERRY KAPLAN: [LAUGHS] I would love to give you a snappy answer, but I’ll try it. I’m, I’m doing it right now but I’m –

BROOKE GLADSTONE: [LAUGHS] You have just outlined the plot of about 700,000 science fiction novels, in warning, in a clear and dispassionate way, of the potential hazards of artificial intelligence.

JERRY KAPLAN: You’re absolutely right, and I come back to that IBM statement, “Computers can only do what they’re programmed to do.” That is completely false today. You know, I, I keep thinking of the example of squirrels crossing the road.

BROOKE GLADSTONE: Mm-hmm.

JERRY KAPLAN: It’s like squirrels haven’t evolved to deal with 2,000-pound piles of metal that approach them at 60 miles an hour, and that’s why they get run over. You’re not designed to deal with a threat that’s diffuse in the, in the cloud, that is dealing with you in ways that you may not even realize.

When you look at the two great scourges of the modern world, the first being rampant unemployment and the second being income inequality, I think that a major cause of both of those is the accelerating progress in technology, in general, and in artificial intelligence, in particular.

BROOKE GLADSTONE: But you can’t stop that.

JERRY KAPLAN: No, you can’t stop that. But there’s a lot you can do about it, in other words, not to prevent it but to make the transition to a new world much less bumpy. I think the robot Armageddon is going to be economic, not a physical war.

[MUSIC UP & UNDER]

BROOKE GLADSTONE: Jerry, thank you very much.

JERRY KAPLAN: Okay. Thank you, Brooke.

BROOKE GLADSTONE: Jerry Kaplan is an entrepreneur who teaches the History and Philosophy of Artificial Intelligence at Stanford University.

Hosted by Brooke Gladstone

Produced by WNYC Studios