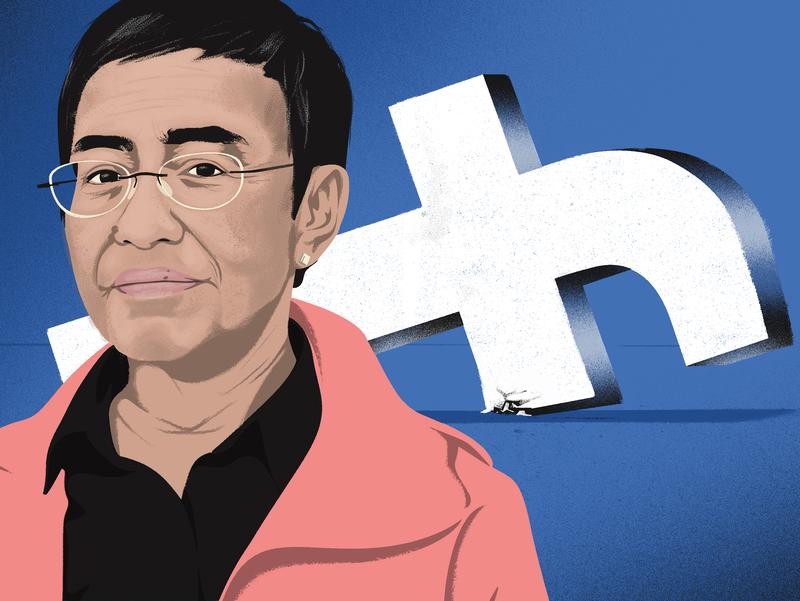

The Nobel Prize Winner Maria Ressa on the Turmoil at Facebook

David: The trickle of bad news about Facebook, or, as it's just been rebranded, Meta, has turned into a torrent. Weeks ago we learned that the company knows that Instagram, for example, which it owns, causes serious mental distress for many teenagers, and the release of thousands of company documents known as the Facebook Papers reveals a staggering number of missteps and misdeeds.

Facebook's indifference to how it accelerates the circulation of toxic and false information, its participation in corroding civil and democratic society are now just impossible to ignore. The journalist Maria Ressa shared a Nobel Peace Prize in October for her work to protect freedom of expression.

Maria Ressa: Hi.

David: Here we are. Maria, how are you?

Maria Ressa: Good. It's one of those days of cascading meetings and--

David: Ressa runs the digital news organization, Rappler, which is based in the Philippines. She's been the target of hate campaigns by supporters of President Rodrigo Duterte over and over again. At first, Ressa believed Facebook would be critical to the success of her enterprise in journalism in general, but she soon became one of Facebook's most vocal and informed critics.

I want to read to you something that you've said, and I want to expand on it. You said this: "I think that the rollback of democracy globally and the tearing apart of shared reality has been because of tech. It's because news organizations lost our gatekeeping powers to technology and technology took the revenues that we used to have, and they took the powers, but they abdicated responsibility." These revelations have made clearer-- It's been clear to many, but they've made clearer what the problem is with Facebook and social media in the way you've been describing it.

I guess the next question we have to ask is what is to be done, to repeat the famous question. Facebook, not to do their work for them, but Facebook would charge to the microphone and say, "Look, we have spent millions upon millions of dollars to do better. We've essentially hired a kind of supreme court to adjudicate some of these difficult questions and hired and brought in some extremely prestigious minds from the world of journalism and academia and all the rest. We have tried to strip away as much hate speech as we possibly can, but it comes in a torrent. We can't get everything." Do you have any sympathy for this argument?

Maria Ressa: None.

David: None, so go ahead.

Maria Ressa: None, and I get it on both ends because what Facebook has enabled is an environment where my government has been able to file 10 arrest warrants against me in less than two years. Now Facebook, these companies didn't set out to be news organizations. They actually don't have standards and ethics or the mission to protect the public sphere, but that's also where regulation must come in, because that is exactly what they're doing.

These platforms are the connective tissue of society. These platforms determine our reality. They exploit the weaknesses of our biology to actually shift behavior. To shift the way you think. If the way you think shifts, then the way you behave shifts. January 6 is a perfect example of that. I don't even know where to begin. [laughs]

David: You're doing great. Keep charging on. Go ahead. You said January 6th was a perfect example.

Maria Ressa: Again, was that a surprise for any of us who live on Facebook? It wasn't because we were seeing this. Think of it like-- Sorry, I'm going to do three assumptions that the platforms do that just drive me crazy as a journalist. The first is that if you don't like something, if a lie is told about you, you just don't like something, mute it. If it's a lie that is tearing down your credibility, does muting it help? Number one. Number two, that more and faster is better. It really isn't and that's part of it. I think the friction that is necessary to stop the spread of lies isn't even in the calculation because that's not in the design.

Then I think the third part is that all of us have our own realities. Which democracy can survive when you have almost 3 billion Truman Shows and we are each performing, not to mention the impact on the individual who is performing? That shift in behavior is real and it is exploited by governments like mine.

David: If Facebook is incapable, for all the reasons we know, of dealing with this torrent, is the next step the government, in some way, being in charge of that? I think both you and I were raised to think that the government being in charge of determining what is fact and what is not fact and what is legitimate speech and what is hate speech is complicated, to say the least.

Maria Ressa: That's not what they should be doing. It's not about content and that's where you run into the free speech issues. It's because that's downstream. Go upstream to the problem, the design of the platform, there must be radical transparency for what is going into these algorithms that are determining our realities. If you go there, you bypass this. Why are fake accounts being allowed to proliferate at the scale that they do? Even as early as 2017, Facebook's footnotes and its disclosure said that the Philippines had more than the normal average of fake accounts.

They know when an account is fake, so it's like playing a game of Whac-A-Mole. Legislation should deal with fraud. It should deal with the same laws that are-- The actions that are illegal in the real world should be mirrored in the virtual world. You don't have to recreate reality, you don't have to have new definitions. Part of our problem, I think, is that we're trying to find new words for that algorithmic bias, because in the end, while the platforms will say the algorithms are a machine, they are programmed and they reflect the biases of the programmer, which is also why-- Coded Bias. I don't know if you saw that film.

There's an MIT student who couldn't do an AI experiment until she put a white face on because of the coded bias. Part of the problem is that these American social media platforms, these American companies, have exported their bias, and it is insidiously built into the information ecosystem of the Philippines and of any other country.

David: A lot of people are calling for the breaking up of Facebook and for Mark Zuckerberg, if not to step down entirely, to be in charge of a realm of Facebook that would be much diminished. How do you look at that problem, and would that solve anything?

Maria Ressa: I don't know the right answer to that because I do think that we're Facebook country, the Philippines. 100% of Filipinos on the internet are on Facebook. I believed in the power of Facebook before they betrayed my beliefs. When we started Rappler in 2012, our rallying cry, we asked people to go on Facebook. Our rallying cry was social media for social good. Social media for social change. I had hoped that we could use technology to jumpstart development and build institutions bottom up.

David: You feel betrayed by that?

Maria Ressa: Yes, I was naive. I didn't think that the money-- because in the end, they're using a scorched earth policy. The same way that the Duterte administration is using a scorched earth policy. The generation after is going to have to pay for everything that's been done now. That's the same thing that Facebook is doing. It doesn't make sense unless the money in the short term is worth it for them. Let me put it this way--

David: Do you believe that Mark Zuckerberg and Sheryl Sandberg and company blundered into the problems that they've got now or they knew all along exactly what they were doing and didn't care?

Maria Ressa: I can't call them evil, not yet. Because that's what it is. That's what surveillance capitalism is: can you do it even if people are dying? I ask this question all the time: who is responsible for genocide in Myanmar? It isn't just the military. It isn't just the groups that seeded that, or the killings in the Philippines, which were enabled. They changed reality. They saw it coming, but by digging in his heels-- and I guess you go back to Mark Zuckerberg and the power he has.

When I met him, I was struck by how smart-- He is intelligent, David. He knows the technology, but what he didn't realize, I think, was the impact of this. He looks at it in terms of-- Do you remember that quote of his, "It's only 1%"?

David: Yes. I think what Zuckerberg was getting at is his claim that less than 1% of what's on Facebook is fake news.

Maria Ressa: 1% of how much is how much and what is the impact of that? For a journalist, one error is too much. It's like saying, "Oh, well, we can have some poison in the environment." Let's say it's a body. In our body, we can have poison in there, but that poison spreads and it will kill.

David: It's 1% cyanide in your dinner. Maria, do you extend your critique of social media to Twitter and Google as well as Facebook equally?

Maria Ressa: I do, but each platform is different. I will say this for search: the page ranking, if you look at all of the things that are there, is better thought out. It has a lot more inputs into it and they are more transparent.

David: We're talking about Google now.

Maria Ressa: Yes. Google search. YouTube, on the other hand, could do with a bit more. In the Philippines, YouTube is now number one. Twitter in the United States is different from the Philippines. I feel more protected on Twitter than I do on Facebook, and that's why you'll see me there more than I am on Facebook, even though Facebook is essentially still our internet.

David: Was the initial conceit the original sin? In other words, the original conceit of Facebook was, "We are not a publisher, we're merely a platform. We're providing a global Hyde Park Corner where everybody can say what they want and exchange information where they want." Was that the original sin?

Maria Ressa: I think the original sin was when they got rid of-- It worked really well when it was Facebook and you had to have your campus ID. You knew who you were, and the newsfeed was chronological. That's the first. Then they tweaked it over time, and the newsfeed is no longer chronological; that's the personalization part. That's a tech construct again. It's personalization.

Think about it, David. Did it make sense that you are given everything you want, that your cognitive bias is fueled by more of what you want? Is that really the right thing? I think this is part of the reason the values of our world are slightly turned upside down, because we've adopted "personalization is better" hook, line, and sinker. Oh, yes, okay. Well, it does make the company more money, but is that the right thing? Because personalization also tears apart a shared reality.

David: Maria Ressa runs the news site Rappler in the Philippines.

Copyright © 2021 New York Public Radio. All rights reserved. Visit our website terms of use at www.wnyc.org for further information.

New York Public Radio transcripts are created on a rush deadline, often by contractors. This text may not be in its final form and may be updated or revised in the future. Accuracy and availability may vary. The authoritative record of New York Public Radio’s programming is the audio record.