Geoffrey Hinton on How Neural Networks Revolutionized AI

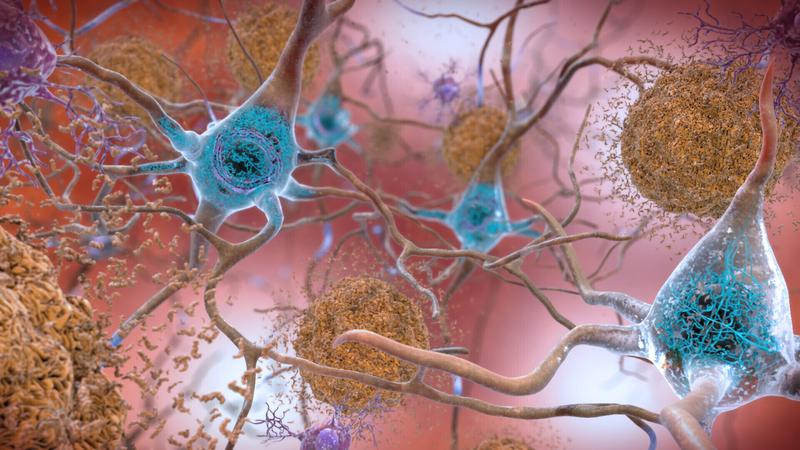

(National Institute on Aging, NIH / Associated Press)

This is On the Media. I'm Brooke Gladstone. If you show a three-year-old child a picture and ask them what's in it, you'll get pretty good answers.

Child 1: Okay, that's a cat sitting in a bed.

Child 2: The boy is petting their elephant.

Child 3: Those are people, they're going on an airplane.

Child 4: That's a big airplane.

Brooke Gladstone: Those are clips from a 2015 TED Talk by Fei-Fei Li, a Computer Science Professor at Stanford University. She was consumed by the fact that despite all of our technological advances, our fanciest gizmos can't make sense of what they see.

Fei-Fei Li: Our most advanced machines and computers still struggle at this task.

Brooke Gladstone: In 2010, she started a major computer vision competition called the ImageNet Challenge, where software programs compete to correctly classify and detect objects and scenes. Contestants submit AI models that have been trained on millions of images organized into thousands of categories. Then the model is given images it's never seen before and asked to classify them. In 2012, a pair of doctoral students named Alex Krizhevsky and Ilya Sutskever entered the competition with a neural network architecture called AlexNet, and the results were astounding.

Geoffrey Hinton: They did much better than the existing technology, and that made a huge impact.

Brooke Gladstone: Geoffrey Hinton was their PhD advisor at the University of Toronto and collaborator on AlexNet. When we spoke in January, Geoff was still working at Google, but in May, he publicly left the company, specifically so that he could--

Geoffrey Hinton: Blow the whistle and say, we should worry seriously about how we stop these things getting control over us. It's going to be very hard, but for the existential threat of AI taking over, we're all in the same boat. It's like nuclear weapons. If there's a nuclear war, we all lose.

Brooke Gladstone: The warnings hit differently coming from Geoffrey Hinton because he's had a hand in pushing AI along as an explorer and developer of AI technology, especially the architecture of neural networks, since the '70s. It actually began when a high school friend started talking to him about holograms and the brain.

Geoffrey Hinton: Holograms had just come out, and he was interested in the idea that memories are distributed over the whole brain, so your memory of a particular event involves neurons in all sorts of different parts of the brain. That got me interested in how memory works.

Brooke Gladstone: Hologram, meaning a picture or a more, for lack of a better word, holistic way of storing information as opposed to just words. Is that what you mean?

Geoffrey Hinton: No. Actually, a hologram is a holistic way of storing an image as opposed to storing it pixel by pixel. When you store it pixel by pixel, each little bit of the image is stored in one pixel. When you store it in a hologram, every little bit of the hologram stores the whole image. You can take a hologram and cut it in half and you still get the whole image, it's just a bit fuzzier. It just seemed like a much more interesting idea than something like a filing cabinet, which was the normal analogy, where the memory of each event is stored as a separate file in the filing cabinet.

Brooke Gladstone: There was somebody named Karl Lashley, you said, who took out bits of rats' brains and found that the rats still remembered things.

Geoffrey Hinton: Yes. Basically, what he showed was that the memory for how to do something isn't stored in any particular part of the brain. It's stored in many different parts of the brain. In fact, the idea that, for example, an individual brain cell might store a memory doesn't make a lot of sense because your brain cells keep dying and each time a brain cell dies, you don't lose one memory.

Brooke Gladstone: This notion of memory, this holographic idea, was very much in opposition to conventional symbolic AI, which was all the rage in the last century.

Geoffrey Hinton: Yes, you can draw a contrast between two completely different models of intelligence. In the symbolic AI model, the idea is you store a bunch of facts as symbolic expressions, a bit like English but cleaned up so it's not ambiguous. You also store a bunch of rules that allow you to operate on those facts, and then you can infer things by applying the rules to the known facts to get new known facts. It's based on logic, how reasoning works, and then they take reasoning to be the core of intelligence.

There's a completely different way of doing business, which is much more biological, which is to say we don't store symbolic expressions. We have great big patterns of activity in the brain, and these great big patterns of activity, which I call vectors, these vectors interact with one another and these are much more like holograms.
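
[Illustration, not part of the broadcast: the symbolic approach described above, stored facts plus rules that derive new facts, can be sketched as a tiny forward-chaining loop. The facts, the rules, and the name `forward_chain` are made up for this example and do not come from any system mentioned in the interview.]

```python
def forward_chain(facts, rules):
    """Apply rules to known facts until no new facts can be derived."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            # A rule fires when all of its premises are already known.
            if conclusion not in known and all(p in known for p in premises):
                known.add(conclusion)
                changed = True
    return known


# Hypothetical facts and rules, written as unambiguous symbolic expressions.
facts = {"cat(tom)"}
rules = [
    (("cat(tom)",), "mammal(tom)"),
    (("mammal(tom)",), "animal(tom)"),
]

derived = forward_chain(facts, rules)
```

Here `derived` ends up holding the original fact plus the two inferred ones. Nothing in the program knows that a cat resembles a dog; it only knows what the rules state explicitly.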

Brooke Gladstone: You've got these vectors of neural activity.

Geoffrey Hinton: For example, large language models that lead to big chatbots are all the rage nowadays. If you ask, how do they represent words or word fragments? What they do is they convert a symbol that says it's this particular word into a big vector of activity that captures lots of information about the word. They'd convert the word cat into a big vector, which is sometimes called an embedding, that is a much better representation of cat than just a symbol. All the similarities of things are conveyed by these embedding vectors. Very different from a symbol system. The only property a symbol has is that you can tell whether two symbols are the same or different.
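
[Illustration, not part of the broadcast: a minimal sketch of the contrast drawn here between symbols and embedding vectors. The three-number vectors below are invented for the example; real models learn vectors with hundreds or thousands of dimensions. Cosine similarity is one common way to compare such vectors and is an assumption here, not something named in the interview.]

```python
import math


def cosine(u, v):
    """Cosine similarity: near 1.0 for vectors pointing the same way."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


# Made-up embeddings, chosen by hand purely for illustration.
embedding = {
    "cat": [0.9, 0.8, 0.1],
    "dog": [0.8, 0.9, 0.2],
    "car": [0.1, 0.2, 0.9],
}

# A symbol can only be tested for identity: same or different.
same_symbol = "cat" == "dog"

# A vector can be compared by degree of similarity.
cat_dog = cosine(embedding["cat"], embedding["dog"])
cat_car = cosine(embedding["cat"], embedding["car"])
```

With these invented numbers, `cat_dog` comes out much higher than `cat_car`, while the symbol comparison can only report that "cat" and "dog" are different.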

Brooke Gladstone: I'm thinking of Moravec's Paradox, which I understand is the observation by AI and robotics researchers that reasoning actually requires very little computation, but sensorimotor and perception skills require a lot. He wrote in '88, "It's comparatively easy to make computers exhibit adult-level performance on intelligence tests or playing checkers, and difficult or impossible to give them the skills of a one-year-old when it comes to perception and mobility."

I just wonder, do you think machines can ever think until they can get sensorimotor information built into those systems?

Geoffrey Hinton: There's two sides to this question: a philosophical side and a practical side. Philosophically, I think, yes, machines could think without any sensorimotor experience, but in practice, it's much easier to build an intelligent system if it has sensory input. There's all sorts of things you learn from sensory input, but the big language models that lead to these chatbots, many of them just have language as their input.

One thing you said at the beginning of this question was that reasoning is easy and perception is hard. I'm paraphrasing. That was true when you used symbolic AI, when you try to do everything by having explicit facts and rules to manipulate them. Perception turned out to be much harder than people thought it would be. As soon as you have big neural networks that learn and learn these big vectors, it turns out one kind of reasoning is particularly easy, and it's the kind that people do all the time and is most natural for people, and that's analogical reasoning.

Brooke Gladstone: Analogical reasoning, one thing is like another.

Geoffrey Hinton: Yes, we're very good at making analogies.

Brooke Gladstone: You went on to study psychology, and your career in tech, in which you are responsible for something that amounts to a revolution in AI, was an accidental spinoff of psychology. You went on to get a PhD in AI in the '70s at the oldest AI research center in the UK, the University of Edinburgh. You were in a place where everyone thought that what you were doing, studying memory as multiple stable states in a system, wouldn't work. That, in fact, what you were doing, studying neural networks, was resolutely anti-AI. You weren't a popular guy, I guess.

Geoffrey Hinton: That's right. Back then, neural nets and AI were seen as opposing camps. It wasn't until neural nets became much more successful than symbolic AI that all the symbolic AI people started using the term AI to refer to neural nets so they could get funding.

Brooke Gladstone: When explaining the difference for a non-technical person between what a neural network is and why it was revolutionary compared to symbolic AI, a lot of it hinges around what you think a thought is.

Geoffrey Hinton: I recently listened to a podcast where Chomsky repeated his standard view that thought and language are very close. Whatever thought is, it's quite similar to language. I think that's complete nonsense. I think Chomsky has misunderstood how we use words. If we were two computers and we had the same model of the world, then it would be very useful, one computer telling the other computer which neurons were active, and that would convey from one computer to another what the first computer was thinking. All we can do is produce sound waves or written words or gestures. That's the main way we convey what we're thinking to other people. A string of words isn't what we're thinking. A string of words is a way of conveying what we're thinking. It's the best way we have because we can't directly show them our brain states.

Brooke Gladstone: I once had a teacher who said, if you can't put it into words, then you don't really understand it.

Geoffrey Hinton: I think there were all sorts of things you can't put into words that your teacher didn't understand.

Brooke Gladstone: [laughs] The only place words exist is in sound waves and on pages.

Geoffrey Hinton: The words are not what you operate on in your head to do thinking. It's these big vectors of activity. The words are just pointers to these big vectors of activity. They're the way in which we share knowledge. It's not actually a very efficient way to share knowledge, but it's the best we've got.

Brooke Gladstone: Today you're considered a kind of Godfather of AI. There's a joke that everyone in the field has no more than six degrees of separation from you. You went on to become a professor at the Computer Science Department at the University of Toronto, which helped turn Toronto into a tech hub. Your former students and postdoctoral fellows include people who are now leading the field. What's it like being called the godfather of a field that rejected you for the majority of your career?

Geoffrey Hinton: It's pleasing.

Brooke Gladstone: And now all the big companies are using neural nets.

Geoffrey Hinton: Yes.

Brooke Gladstone: How do you define thinking, and do you think machines can do it? Is there a point in comparing AI to human intelligence?

Geoffrey Hinton: Well, a long time ago, Alan Turing, I think he got fed up with people telling him machines couldn't possibly think because they weren't human, and defined what's called the Turing Test. Back then, you had teletypes, and you would type to the computer the question and it would answer the question. This was a sort of thought experiment. If you couldn't tell the difference between whether a person was answering the question or whether the computer was answering the question, then Alan Turing said you better believe the computer is intelligent.

Brooke Gladstone: I admire Alan Turing, but I never bought that. I don't think it proves anything. Do you buy the Turing Test?

Geoffrey Hinton: Basically, yes. There's problems with it, but it's basically correct. The problem is, suppose someone is just adamantly determined to say machines can't be intelligent. How do you argue with them? Because nothing you present to them satisfies them that machines are intelligent.

Brooke Gladstone: I don't agree with that either. I could be convinced if machines had the hologram-like web of experience to draw from, the physical as well as the mental and computational.

Geoffrey Hinton: The neural nets are very holistic. Let me give you an example from ChatGPT. There's probably better examples from some of the big Google models, but ChatGPT is better publicized. You ask ChatGPT to describe losing one's sock in the dryer in the style of the Declaration of Independence. It ends up by saying that all socks are endowed with certain inalienable rights by their manufacturer. Now why did it say, manufacturer? Well, it understood enough to know that socks are not created by God. They're created by manufacturers. If you're saying something about socks, but in the style of the Declaration of Independence, the equivalent of God is the manufacturer. It understood all that because it has sensible vectors that represent socks and manufacturers and God and creation.

That's an example of a kind of holistic understanding, an understanding via analogies that's much more human-like than symbolic AI and that is being exhibited by ChatGPT.

Brooke Gladstone: And that in your view is tantamount to thinking, it is thinking.

Geoffrey Hinton: That's intuitive thinking. What neural nets are good at is intuitive thinking. The big chatbots aren't so good at explicit reasoning, but then nor are people. People are pretty bad at explicit reasoning.

Brooke Gladstone: We don't have identical brains. Our brains run at low power, about 30 Watts, and they're analog. We're not as good at sharing information as computers are.

Geoffrey Hinton: You can run 10,000 copies of a neural net on 10,000 different computers, and they can all share their connection strengths because they all work exactly the same way, and they can share what they learned by sharing their weights, their connection strengths. Two computers that are sharing a trillion weights is an immense bandwidth of information between the two computers. Whereas two people who are just using language have a very limited bottleneck.
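
[Illustration, not part of the broadcast: a toy sketch of identical copies of a network pooling what they learned by sharing connection strengths. Averaging the weights is one simple scheme assumed here for illustration; the interview does not say how the sharing is done, and the function name and numbers are hypothetical.]

```python
def average_weights(copies):
    """Combine several copies' weights position by position."""
    n = len(copies)
    return [sum(ws) / n for ws in zip(*copies)]


# Two copies of the same tiny "network" whose weights drifted apart
# after each one learned from different data (made-up numbers).
copy_a = [0.25, -0.5, 1.0]
copy_b = [0.75, 0.5, 1.0]

shared = average_weights([copy_a, copy_b])

# Each copy adopts the pooled weights, so both now carry what the
# other learned without anything being put into words.
copy_a = list(shared)
copy_b = list(shared)
```

The exchange works only because the copies have identical structure, so weight number 2 means the same thing in every copy. Two brains have no such correspondence, which is the bottleneck Hinton describes.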

Brooke Gladstone: Computers are telepathic.

Geoffrey Hinton: It's as if computers are telepathic, right.

Brooke Gladstone: Were you excited when ChatGPT was released? We've been told, it isn't really a huge advancement. It's just out there for the public.

Geoffrey Hinton: In terms of its abilities, it's not significantly different from a number of other things already developed, but it made a big impact because they did a very good job of engineering it so it was easy to use.

Brooke Gladstone: Are there potential implementations of AI that concern you?

Geoffrey Hinton: People using AI for autonomous lethal weapons. The problem is that a lot of the funding for developing AI is by governments who would like to replace soldiers with autonomous lethal weapons, so the funding is explicitly for hurting people. That concerns me a lot.

Brooke Gladstone: That's a pretty clear one. Is there something subtler about potential applications that give you pause?

Geoffrey Hinton: I'm hesitant to make predictions beyond about five years. It's obvious that this technology is going to lead to lots of wonderful new things. As one example, AlphaFold, which predicts the 3D shape of protein molecules from the sequence of bases that define the molecule, that's extremely useful and is going to have a huge effect in medicine. There's going to be a lot of applications like that. They're going to get much better at predicting the weather. Not beyond 20 days or so, but predicting the weather in like 10 days' time, I think these big AI systems are already getting good at that. There's just going to be huge numbers of applications.

In a sensible society, this would all be good. It's not clear that everything's going to be good in the society we have.

Brooke Gladstone: What about the singularity, the idea that what it means to be human could be transformed by a breakthrough in artificial intelligence or a merging of human and artificial intelligence into a kind of transcendent form?

Geoffrey Hinton: I think it's quite likely we'll get some kind of symbiosis. AI will make us far more competent. I also think that the stuff that's already happened with neural nets is changing our view of what we are. It's changing people's view from the idea that the essence of a person is a deliberate reasoning machine that can explain why it arrives at its conclusions. The essence is much more a huge analogy machine that's forever making analogies between a gazillion different things to arrive at intuitive conclusions very rapidly. That seems far more like our real nature than reasoning machines.

Brooke Gladstone: Have you ever had a flight of fancy of what this ultimately might mean in how we live?

Geoffrey Hinton: That's beyond five years.

Brooke Gladstone: You're right. I see [crosstalk]--

Geoffrey Hinton: I have no idea.

Brooke Gladstone: You warned me. Geoffrey, thank you very much.

Geoffrey Hinton: Okay.

Brooke Gladstone: Geoffrey Hinton is a former engineering fellow at Google Brain. He resigned in May, and has been voicing his concerns about the impending AI arms race and the lack of protection ever since. Coming up, with great computer power comes great responsibility. This is On the Media.